Publications

Qizhe Zhang, Mengzhen Liu, Lichen Li, Ming Lu, Yuan Zhang, Junwen Pan, Qi She, Shanghang Zhang†

arXiv 2025 [Paper] [Code] [Website]

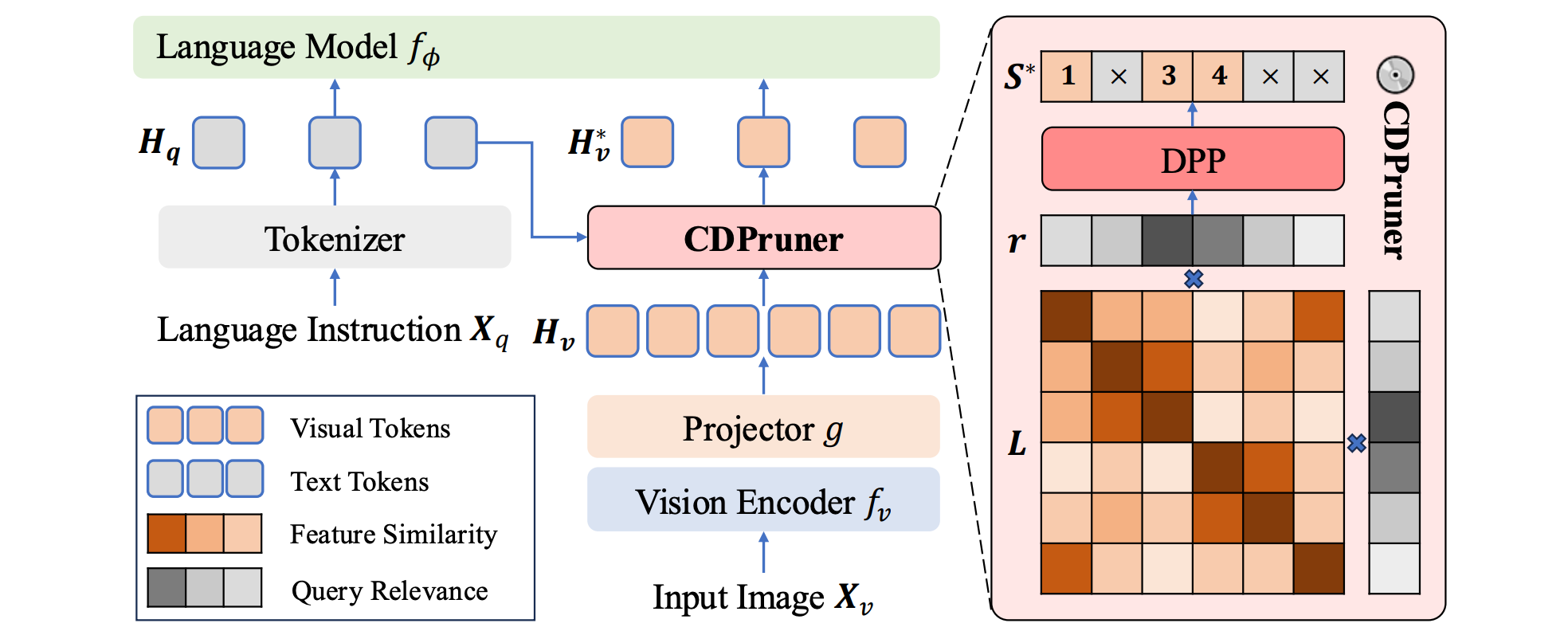

" We propose CDPruner as A training-free and model-agnostic visual token pruning method for MLLM inference acceleration by maximizing the conditional diversity of retained tokens. "

Qizhe Zhang, Aosong Cheng, Ming Lu, Renrui Zhang, Zhiyong Zhuo, Jiajun Cao, Shaobo Guo, Qi She, Shanghang Zhang†

ICCV 2025 [Paper] [Code] [Website]

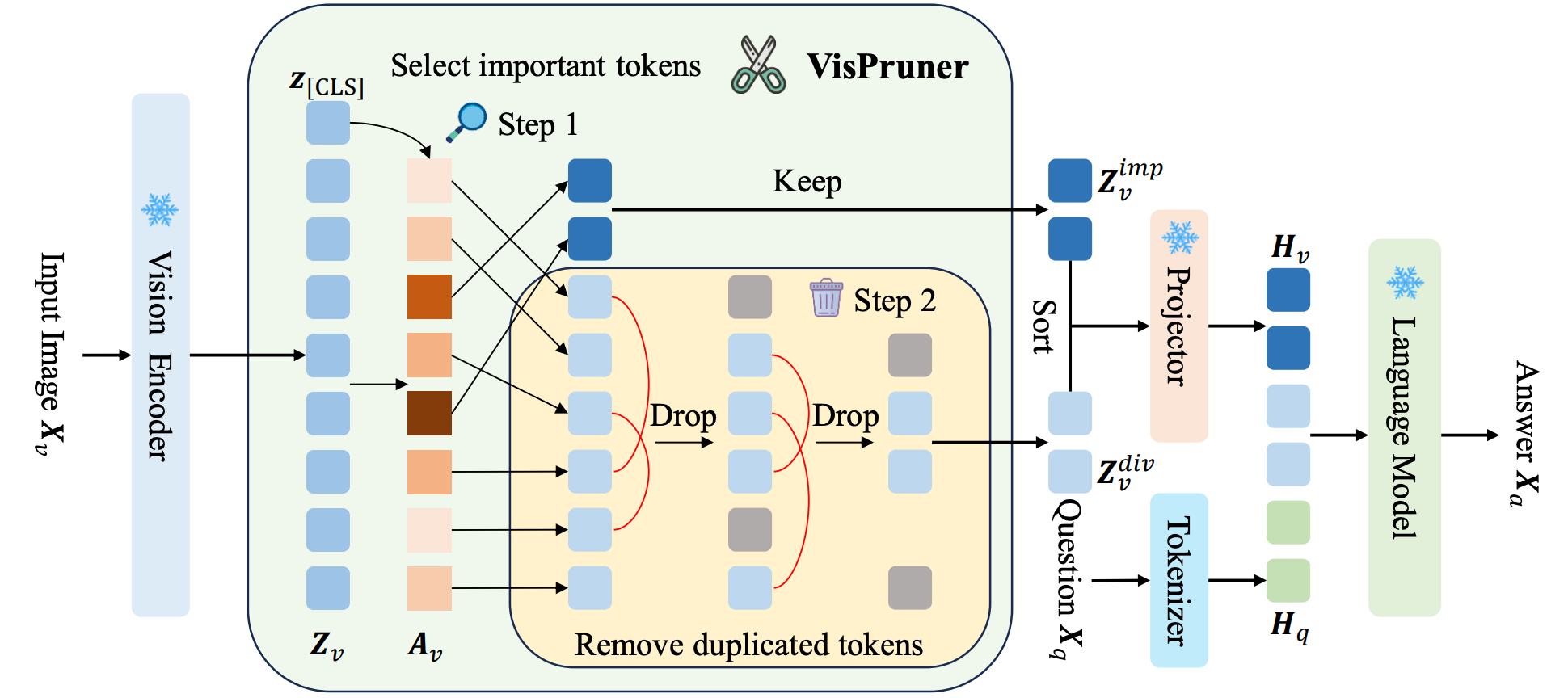

" We propose VisPruner as a plug-and-play method that utilizes visual cues for more effective token pruning in large vision language models. "

Zhi Zhang*, Qizhe Zhang*, Zijun Gao, Renrui Zhang, Ekaterina Shutova, Shiji Zhou, Shanghang Zhang†

CVPR 2024 [Paper] [Code]

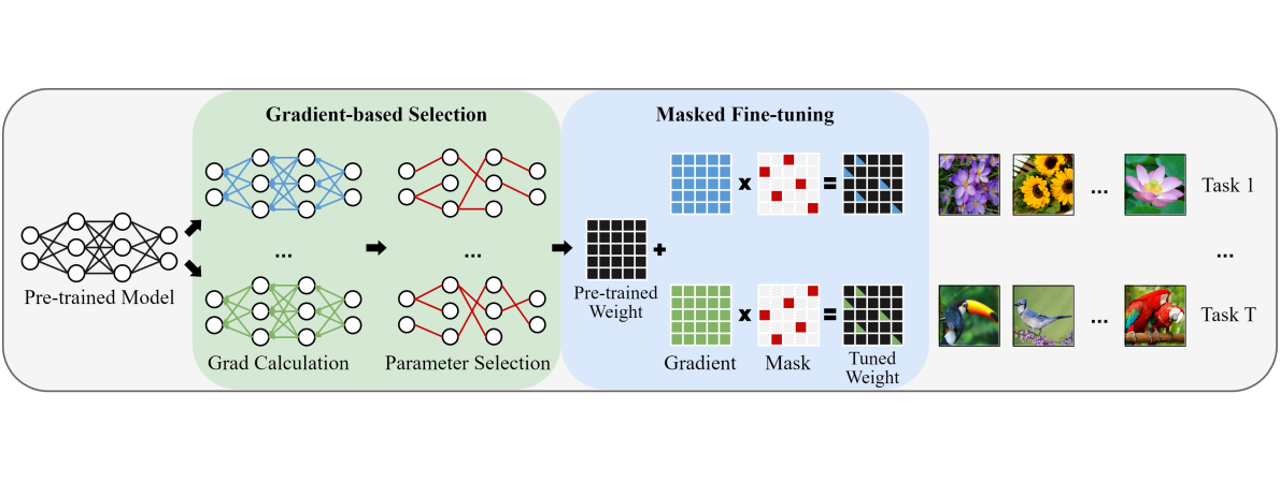

" We propose a novel gradient-based parameter selection (GPS) method for effeicient fine-tuning. GPS does not introduce any additional storage or computational cost during both training and inference stages. Moreover, it possesses model-agnostic and task-adaptive properties, achieving outstanding performance. "

Jiaming Liu*, Qizhe Zhang*, Jianing Li, Ming Lu, Tiejun Huang, Shanghang Zhang†

ICRA 2024 [Paper] [Code]

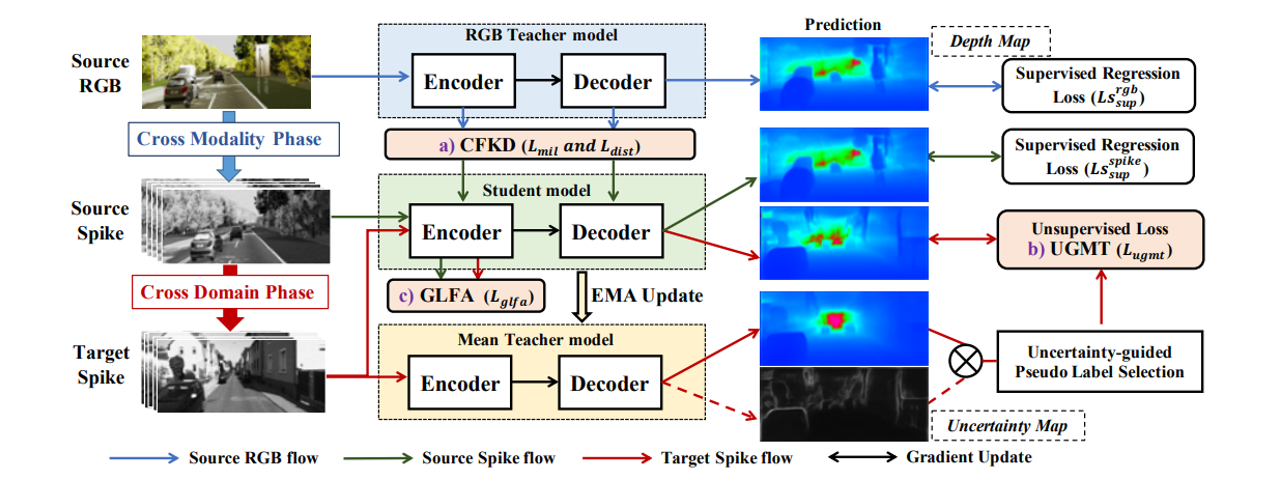

" We propose a novel cross-modality cross-domain (BiCross) framework for unsupervised spike depth estimation. To be mentioned, we are the first to exploit the opensource RGB datasets to help unsupervised learning for spike depth estimation. "

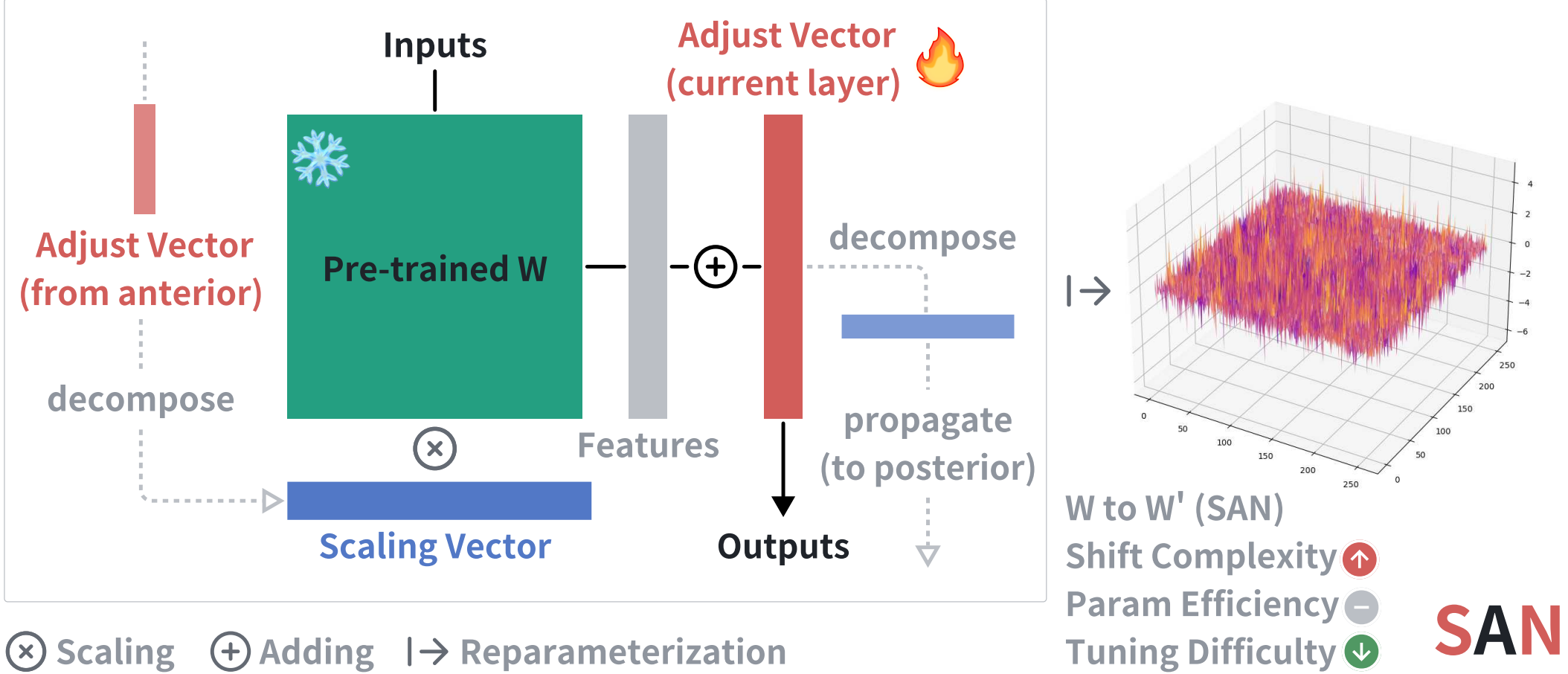

Gaole Dai, Yiming Tang, Chunkai Fan, Qizhe Zhang, Zhi Zhang, Yulu Gan, Chengching Tseng, Shanghang Zhang†, Tiejun Huang

ICML 2025 [Paper] [Code]

" We propose Synapse and Neuron (SAN), which decomposes and propagates scaling components from anterior feature-adjusting vectors towards posterior weight matrices. SAN is theoretically grounded in the Long-Term Potentiation/Depression phenomena, which govern synapse development through neurotransmitter release modulation. "

Jiajun Cao, Yuan Zhang, Tao Huang, Ming Lu, Qizhe Zhang, Ruichuan An, Ningning Ma, Shanghang Zhang†

CVPR 2025 [Paper] [Code]

" We propose Mixture-of-Visual-Encoder Knowledge Distillation (MoVE-KD), a novel framework that distills the unique proficiencies of multiple vision encoders into a single, efficient encoder model. "

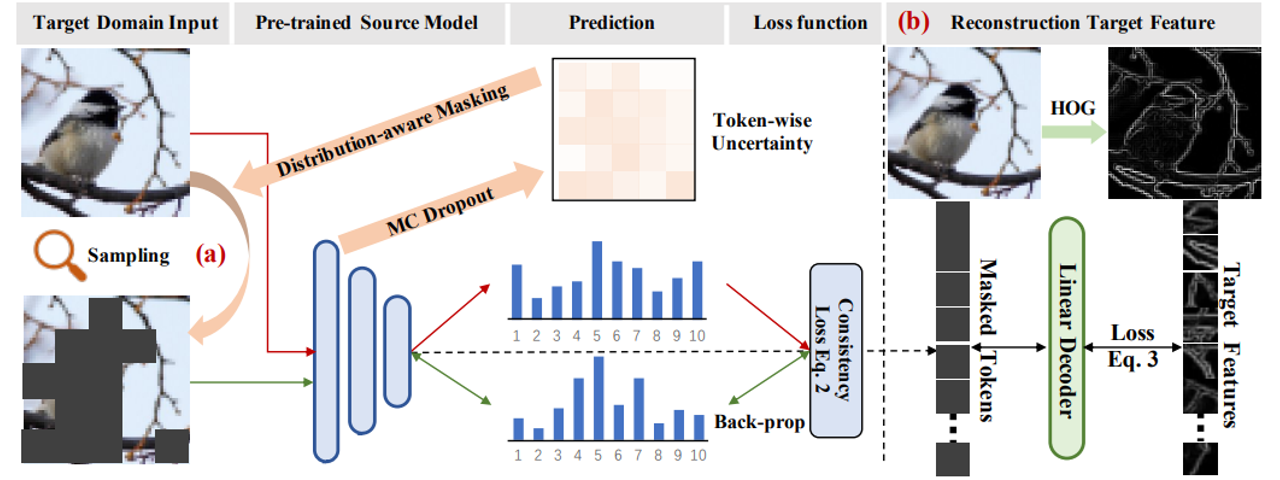

Jiaming Liu*, Ran Xu*, Senqiao Yang*, Renrui Zhang†, Qizhe Zhang, Zehui Chen, Yandong Guo, Shanghang Zhang‡

CVPR 2024 [Paper] [Code] [Website]

" We propose Adaptive Distribution Masked Autoencoders (ADMA) as a novel continual self-supervised method. ADMA enhances the extraction of target domain knowledge while mitigating the accumulation of distribution shifts. "

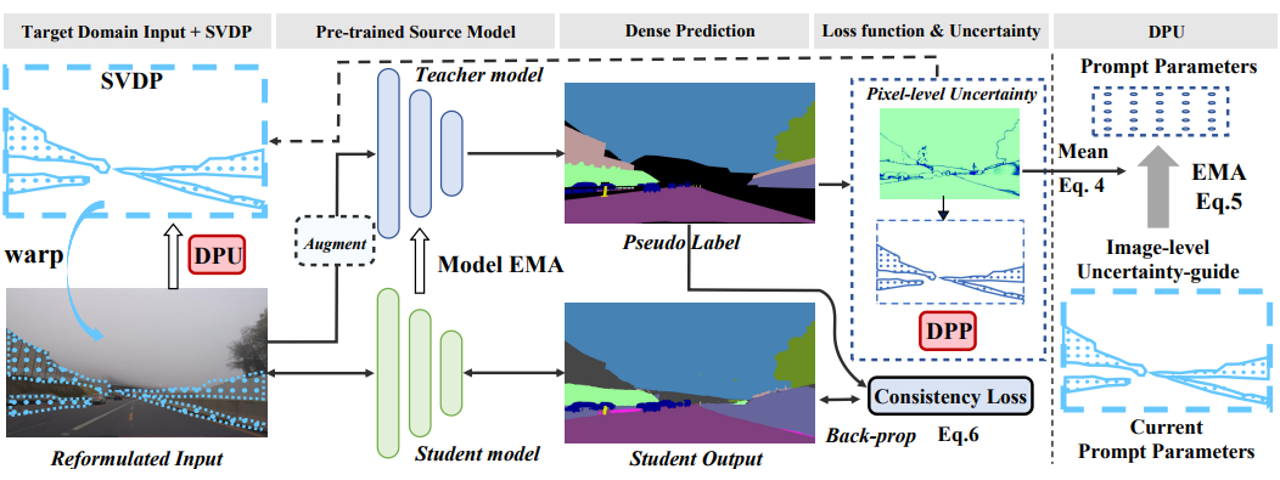

Senqiao Yang*, Jiarui Wu*, Jiaming Liu*, Xiaoqi Li, Qizhe Zhang, Mingjie Pan, Shanghang Zhang†

AAAI 2024 [Paper] [Code] [Website]

" We propose a novel Sparse Visual Domain Prompts (SVDP) approach for dense prediction test-time adaptation (TTA) tasks, which holds minimal trainable parameters in the image-level prompt and preserves more spatial information of the input. "